Scalable Data Engineering for IT Success

- Claude Paugh

- Aug 7, 2025

Updated: Aug 7, 2025

Scalable data infrastructure is now a necessity rather than a luxury. In my work as a data engineer, I’ve seen firsthand how the right infrastructure transforms an organization’s ability to use its data. The challenge lies not just in managing data but in building systems that grow with your business needs. This post explores the essential components of scalable data engineering and how they contribute to long-term IT success.

Understanding Scalable Data Engineering

Scalability in data engineering means designing systems that can handle growing data volume, velocity, and variety without compromising performance or reliability. It’s about future-proofing your data architecture so that as your business expands, your data infrastructure keeps pace without costly overhauls.

To achieve this, we focus on several key principles:

Modularity: Building components that can be independently scaled or replaced.

Automation: Reducing manual intervention to improve efficiency and reduce errors.

Flexibility: Supporting diverse data types and sources.

Resilience: Ensuring systems can recover quickly from failures.

For example, consider a retail company experiencing rapid growth in online sales. Their data pipeline must accommodate spikes in transaction data during peak shopping seasons without slowing down analytics or reporting. By implementing scalable data solutions, they can dynamically allocate resources and maintain smooth operations.
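
To make the resilience principle concrete, here is a minimal sketch of one common recovery pattern: retrying a failing step with exponentially growing delays. The function name `retry_with_backoff` and its parameters are my own illustrative choices, not part of any particular library, and a production pipeline would usually retry only on errors it knows to be transient.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying on failure with exponentially growing delays.

    Hypothetical helper for illustration. `sleep` is injectable so the
    waiting behavior can be observed or replaced in tests.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Delay doubles each time: base, 2 * base, 4 * base, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Wrapping a flaky load step in a helper like this lets a brief outage during a traffic spike resolve itself instead of failing the whole run.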

Building Blocks of Scalable Data Solutions

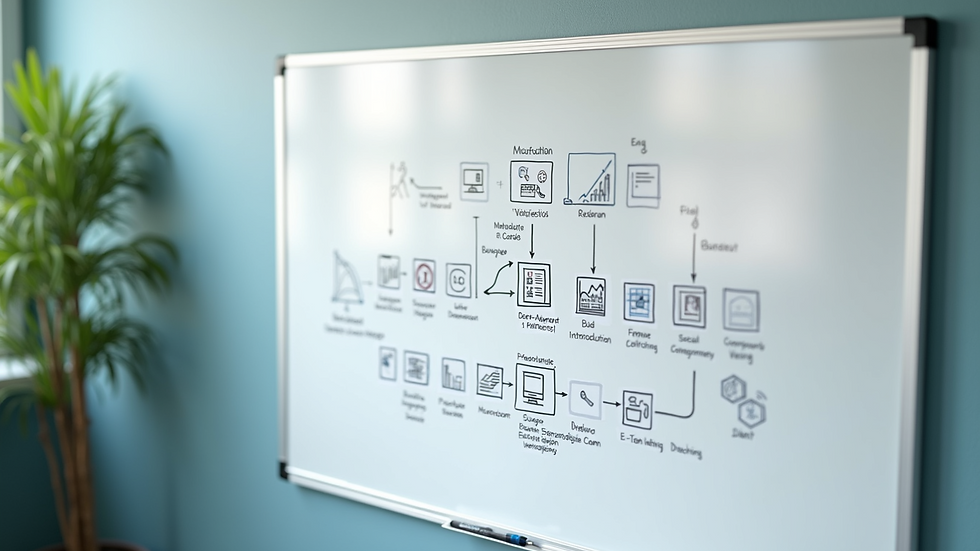

When we talk about scalable data solutions, it’s important to break down the architecture into manageable layers. Each layer plays a critical role in ensuring the system can grow efficiently:

Data Ingestion

This is the entry point where raw data flows into your system. Scalable ingestion pipelines use technologies like Apache Kafka or Amazon Kinesis to handle high-throughput, real-time data streams, and they should also support batch loads for less time-sensitive data.
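
One way to bridge streaming and batch ingestion is micro-batching: grouping a continuous stream of events into fixed-size chunks before handing them downstream. The sketch below uses only the Python standard library. The `micro_batches` name is hypothetical, and the event source is assumed to be any iterable; it does not use the Kafka or Kinesis client APIs.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def micro_batches(events: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a (possibly unbounded) stream of events into lists of at most
    `batch_size` items. The final batch may be smaller."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(events)
    while True:
        # Pull up to batch_size events without loading the whole stream.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch
```

Because it is a generator, only one batch is held in memory at a time, which is what lets the same code serve both small and very large inputs.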

Data Storage

Choosing the right storage solution is crucial. Distributed file systems like HDFS and cloud object stores such as Amazon S3 provide durable storage that scales out across many nodes. Data lakes and warehouses must be designed to scale horizontally, allowing you to add storage and compute power as needed.
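
Storage layout matters as much as the storage engine. A common convention in data lakes is to partition files by date using `key=value` path segments, so queries can skip partitions they do not need. Here is a small sketch of building such a path; the `partitioned_key` function and the exact layout are assumptions for illustration, not a required standard.

```python
from datetime import datetime, timezone


def partitioned_key(dataset: str, event_time: datetime, file_name: str) -> str:
    """Build a date-partitioned object key such as
    'orders/year=2025/month=08/day=07/part-0001.parquet'.

    Hypothetical layout. Requires a timezone-aware timestamp so that
    partitions are always computed in UTC.
    """
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    ts = event_time.astimezone(timezone.utc)
    return f"{dataset}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/{file_name}"
```

Normalizing to UTC before partitioning avoids the same event landing in different folders depending on which server wrote it.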

Data Processing

Processing frameworks like Apache Spark or Apache Flink enable scalable transformation and analysis of large datasets. These tools split data into partitions and process them in parallel across a cluster, which is essential for handling big data workloads efficiently.
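
The core idea behind parallel processing frameworks can be shown at toy scale: split the data into partitions, aggregate each partition independently, then merge the partial results. The sketch below uses a standard-library thread pool purely to show that shape. It is not Spark or Flink code, the function names are my own, and Python threads will not speed up CPU-bound work the way a real cluster does.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple

Sale = Tuple[str, float]  # (category, amount)


def partial_totals(partition: Iterable[Sale]) -> Counter:
    """Aggregate one partition on its own, with no shared state."""
    totals: Counter = Counter()
    for category, amount in partition:
        totals[category] += amount
    return totals


def parallel_totals(partitions: List[List[Sale]], workers: int = 4) -> Dict[str, float]:
    """Aggregate every partition concurrently, then merge the partials."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_totals, partitions))
    merged: Counter = Counter()
    for partial in partials:
        merged.update(partial)  # adds amounts per category
    return dict(merged)
```

Since each partition is aggregated independently, adding more partitions and more workers increases throughput without changing the logic.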

Data Governance and Security

As data scales, governance becomes more complex. Implementing role-based access controls, encryption, and audit trails ensures compliance and protects sensitive information.
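
As a rough illustration of how role-based access control and audit trails fit together, the sketch below checks each request against a role-to-permission table and records every decision, allowed or denied. The role names, the `ROLE_PERMISSIONS` table, and the `AccessController` class are all hypothetical; real systems would delegate this to their platform's identity and access services.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical role table: which actions each role may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}


@dataclass
class AccessController:
    # Each entry is (user, role, action, allowed).
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def is_allowed(self, user: str, role: str, action: str) -> bool:
        """Return whether `role` may perform `action`, and record the
        decision so denied attempts are auditable too."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append((user, role, action, allowed))
        return allowed
```

Unknown roles get an empty permission set, so the default is to deny rather than to allow.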

Data Consumption

Finally, scalable solutions must deliver data to end-users and applications reliably. APIs, dashboards, and reporting tools should be designed to handle concurrent access without degradation.

By carefully architecting each layer, businesses can build robust systems that adapt to changing demands.

Practical Steps to Implement Scalable Data Solutions

Building scalable data systems can seem daunting, but breaking the process into actionable steps helps. Here’s a practical roadmap I recommend:

Assess Current Infrastructure

Start by evaluating your existing data architecture. Identify bottlenecks, single points of failure, and areas lacking automation.

Define Scalability Goals

What growth do you anticipate? Define clear metrics such as data volume, query response times, and user concurrency targets.
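
A simple way to turn "anticipated growth" into a number is compound growth: the projected volume after n months is the current volume times (1 + monthly growth rate) to the power n. The sketch below encodes that and estimates how many months remain before a capacity limit is reached. The function names and the assumption of a constant monthly growth rate are mine; real growth is rarely this smooth.

```python
def projected_volume(current_gb: float, monthly_growth: float, months: int) -> float:
    """Volume after `months` of compound growth.

    `monthly_growth` is a fraction, e.g. 0.10 for 10% per month.
    """
    return current_gb * (1 + monthly_growth) ** months


def months_until_full(current_gb: float, capacity_gb: float, monthly_growth: float) -> int:
    """Whole months until volume reaches or exceeds `capacity_gb`,
    assuming a constant growth rate."""
    if current_gb <= 0 or monthly_growth <= 0:
        raise ValueError("current_gb and monthly_growth must be positive")
    months = 0
    volume = current_gb
    while volume < capacity_gb:
        volume *= 1 + monthly_growth
        months += 1
    return months
```

Running this against your current storage figures gives a concrete deadline to plan around rather than a general sense that data is growing.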

Choose the Right Tools

Select technologies that align with your goals. Cloud-native services often offer built-in scalability and reduce operational overhead.

Design for Modularity

Build loosely coupled components that can be scaled independently. For example, separate ingestion from processing and storage layers.
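
Loose coupling can be sketched in code by having each layer depend only on a small interface, so any stage can be swapped or scaled without touching the others. All class and method names below (`Source`, `Transformer`, `Sink`, and the toy implementations) are hypothetical, chosen just for this example.

```python
from typing import Iterable, List, Protocol


class Source(Protocol):
    def read(self) -> Iterable[dict]: ...


class Transformer(Protocol):
    def apply(self, record: dict) -> dict: ...


class Sink(Protocol):
    def write(self, records: List[dict]) -> None: ...


def run_pipeline(source: Source, transformer: Transformer, sink: Sink) -> int:
    """Wire the three stages together; returns the number of records written."""
    records = [transformer.apply(r) for r in source.read()]
    sink.write(records)
    return len(records)


# Toy implementations; real ones might wrap a queue, a Spark job, or a warehouse.
class ListSource:
    def __init__(self, rows: List[dict]) -> None:
        self.rows = rows

    def read(self) -> Iterable[dict]:
        return iter(self.rows)


class AddTotal:
    def apply(self, record: dict) -> dict:
        # Return a new dict so the source data is not mutated.
        return {**record, "total": record["qty"] * record["price"]}


class MemorySink:
    def __init__(self) -> None:
        self.records: List[dict] = []

    def write(self, records: List[dict]) -> None:
        self.records.extend(records)
```

Because `run_pipeline` knows only the three interfaces, replacing `MemorySink` with a warehouse writer requires no change to ingestion or processing code.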

Automate Workflows

Use orchestration tools like Apache Airflow or AWS Step Functions to automate data pipelines and reduce manual errors.
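
At their core, orchestration tools run tasks in an order that respects their dependencies. The sketch below shows that idea with the standard library's `graphlib` module (Python 3.9 or later). It is not the Airflow or Step Functions API; the function name and task names are hypothetical, and real orchestrators add scheduling, retries, and parallel execution on top.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_in_dependency_order(
    tasks: Dict[str, Callable[[], None]],
    depends_on: Dict[str, Set[str]],
) -> List[str]:
    """Run each task only after everything it depends on has run.

    `depends_on` maps a task name to the names it must wait for.
    Returns the order in which tasks were executed. Raises
    graphlib.CycleError if the dependencies form a cycle.
    """
    graph = {name: depends_on.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()
    return order
```

Declaring dependencies as data, rather than hard-coding the call sequence, is what lets a pipeline grow new steps without someone reworking the run order by hand.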

Implement Monitoring and Alerts

Continuous monitoring helps detect performance issues early. Set up alerts for anomalies in data flow or system health.
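
A basic form of anomaly alerting compares the latest measurement against recent history and flags it when it falls too many standard deviations from the mean. The sketch below applies that to pipeline throughput. The `is_anomalous` name and the default threshold of 3 are illustrative assumptions; dedicated monitoring tools offer far richer detection.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history` (for example, records per minute)."""
    if len(history) < 2:
        return False  # not enough data to judge
    avg = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # History is perfectly flat: any change at all is unusual.
        return latest != avg
    return abs(latest - avg) / spread > threshold
```

A check like this can run after every batch, with a `True` result routed to whatever alerting channel your team already uses.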

Prioritize Data Governance

Establish policies for data quality, security, and compliance. Scalable systems must maintain trustworthiness as they grow.

Iterate and Optimize

Scalability is not a one-time project. Regularly review system performance and optimize based on evolving business needs.

By following these steps, you can build a scalable data foundation that supports your organization’s growth and innovation.

Why Scalable Data Solutions Matter for Long-Term IT Success

Investing in scalable data solutions is an investment in your organization’s future. Here’s why it matters:

Cost Efficiency

Elastic systems let you pay for resources as your needs grow, which avoids large upfront capital expenses and reduces waste from over-provisioning.

Agility

When your data infrastructure can adapt quickly, you can respond faster to market changes and new opportunities.

Improved Decision-Making

Reliable, timely data enables better analytics and insights, driving smarter business strategies.

Risk Mitigation

Scalable architectures with built-in redundancy and governance reduce the risk of data loss, breaches, and compliance failures.

Competitive Advantage

Organizations that harness scalable data solutions can innovate faster and deliver superior customer experiences.

At Perardua Consulting, the goal is to help businesses build these strong, scalable data foundations. By partnering with experts who understand the nuances of data architecture and governance, companies can transform their data capabilities and ensure smooth, compliant operations.

Building scalable data solutions is a journey, not a destination. It requires thoughtful planning, the right technology choices, and ongoing commitment. But the payoff is clear: a resilient, efficient, and future-ready data infrastructure that powers IT success and business growth.